Stop shackling your data scientists: tap into the dark side of ML/AI models

Developing Artificial Intelligence and Machine Learning models comes with many challenges. One of them is understanding why a model behaves the way it does. What is really happening behind its ‘decision-making’ process? What causes unforeseen behavior in a model? To offer a suitable solution, we must first understand the problem. Is it a bug in the code? A structural error within the model itself? Or perhaps a biased dataset? The fix can be anything from correcting a simple logical error to extending the model through complex design work.

ML as a ‘black box’

Machine Learning and Artificial Intelligence models often behave like a black box. We feed them data and receive outputs, but we seldom understand exactly why they make the decisions they make. Data scientists trying to improve a model need the right data to do so. Yet even when working locally in the lab (say, in a Jupyter notebook), the surrounding software environment hides the model's behavioral data from them, adding needless friction.

This masking is far worse once models are deployed to staging or production environments, which are often managed exclusively by IT or engineering teams. As a result, data scientists have no choice but to rely on those teams, a frustrating process that costs both time and money. Even though the data they need may be right in front of them, they have no way to see or access it directly. Well, until now, that is.

We wanted to empower data scientists by making Machine Learning models less opaque. Greater visibility into these models helps professionals understand them better, which in turn makes it much easier to add new features, develop new data dimensions, and improve model accuracy. This is why we have just introduced our first ‘Instant Observability’ flow for machine learning, AI, and big data systems, supporting Apache Spark, TensorFlow, and more.

Instant observability into your model

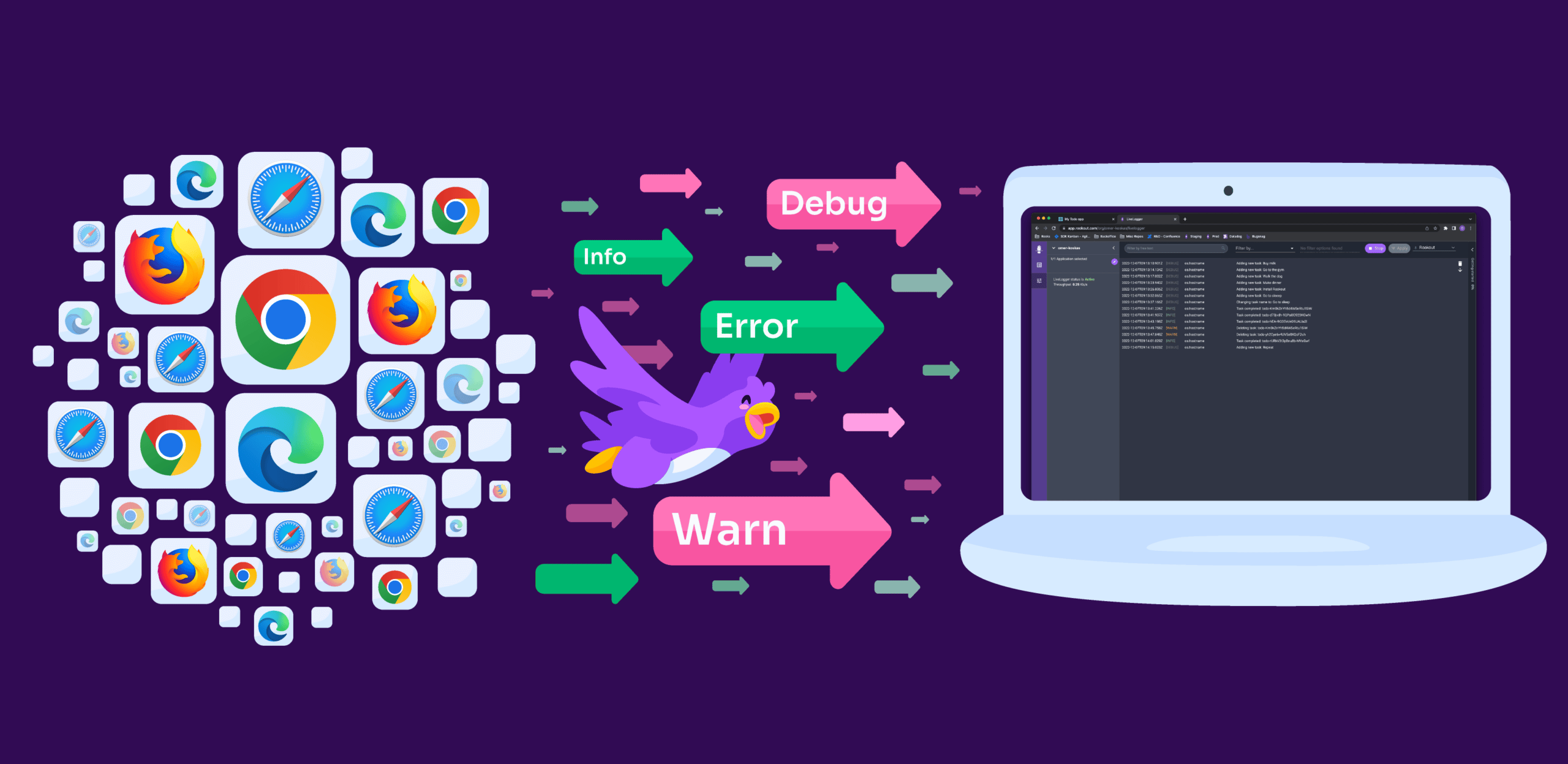

With Rookout’s new offering, data scientists can observe their ML and AI models in action at every stage of development. Machine Learning experts can get the data they need whether the model is being trained or is running in the cloud or locally. For instance, a data scientist can use Rookout to debug a model inside a Jupyter notebook while it is being trained, and then keep collecting data after it has been deployed to production. There is no need to add extra code, restart, or redeploy. This lets data scientists monitor, debug, iterate on, and improve their models faster and more efficiently.

As the demo above shows, professionals can now collect data points in real time throughout the lifecycle of an ML model. Rookout makes model inputs, outputs, and peripheral data accessible on demand, on any platform. When data scientists need data, they no longer have to request code changes from IT and engineering teams or wait for the next release. They can simply use Rookout and watch in real time as their models make decisions. This not only liberates data scientists but also frees backend engineers to focus on their own core work and keeps these requests out of the CI/CD pipeline.

A new understanding

While working on this new tool, we collaborated with several of our existing customers as beta design partners. One of these partners is Otonomo, the automotive data services platform, which uses Spark to process and analyze connected-car user data. The company needed a way to see into every component of its distributed computing system while all of those components run simultaneously. With Rookout, it can now quickly debug code that was previously hard to access, even while that code runs in production.

Is there an easy way to understand the particular behavior of a Machine Learning model? Probably not. But with Rookout’s new feature and its non-breaking breakpoints, it is now possible to watch how ML models behave throughout their lifecycle. Data scientists can iterate on and improve their models much faster, without being slowed down by engineers and deployment cycles.
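To make the idea of a non-breaking breakpoint more concrete, here is a minimal Python sketch. It is not Rookout's implementation or API; it assumes CPython and uses the standard-library `sys.settrace` hook to copy a function's local variables each time a chosen line is about to run, then lets execution continue without pausing. The helper names `add_snapshot_point` and `remove_snapshot_points`, and the toy `predict` model, are hypothetical and exist only for illustration.

```python
import sys
import types
from typing import Any, Dict, List


def add_snapshot_point(target: types.FunctionType, lineno: int,
                       snapshots: List[Dict[str, Any]]) -> None:
    """Hypothetical helper: record target's locals whenever `lineno` is
    about to execute, without pausing the program."""
    code = target.__code__

    def local_tracer(frame, event, arg):
        # A "line" event fires just before a new source line runs.
        if event == "line" and frame.f_lineno == lineno:
            snapshots.append(dict(frame.f_locals))  # copy the data, don't block
        return local_tracer

    def global_tracer(frame, event, arg):
        # Trace only frames that execute the target function's code.
        if event == "call" and frame.f_code is code:
            return local_tracer
        return None

    sys.settrace(global_tracer)  # affects the current thread only


def remove_snapshot_points() -> None:
    """Hypothetical helper: uninstall the tracing hook."""
    sys.settrace(None)


# Toy "model" standing in for real inference code (hypothetical).
def predict(features: List[float]) -> str:
    score = sum(w * x for w, x in zip([0.5, -0.2, 1.0], features))
    label = "positive" if score > 0 else "negative"
    return label


if __name__ == "__main__":
    captured: List[Dict[str, Any]] = []
    # Snapshot just before the `label = ...` line (two lines below `def`).
    add_snapshot_point(predict, predict.__code__.co_firstlineno + 2, captured)
    result = predict([1.0, 2.0, 3.0])
    remove_snapshot_points()
    print(result, captured)
```

Because the tracer only copies `frame.f_locals` and returns, the traced function runs to completion as usual and its inputs and intermediate values are still captured. A production-grade tool would avoid a global hook like this one, since `sys.settrace` adds overhead to every function call in the thread.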